Super-LiDAR Resolution with Software

Learn how to increase the resolution of your LiDAR output without adding processing time by using the Outsight preprocessor software.

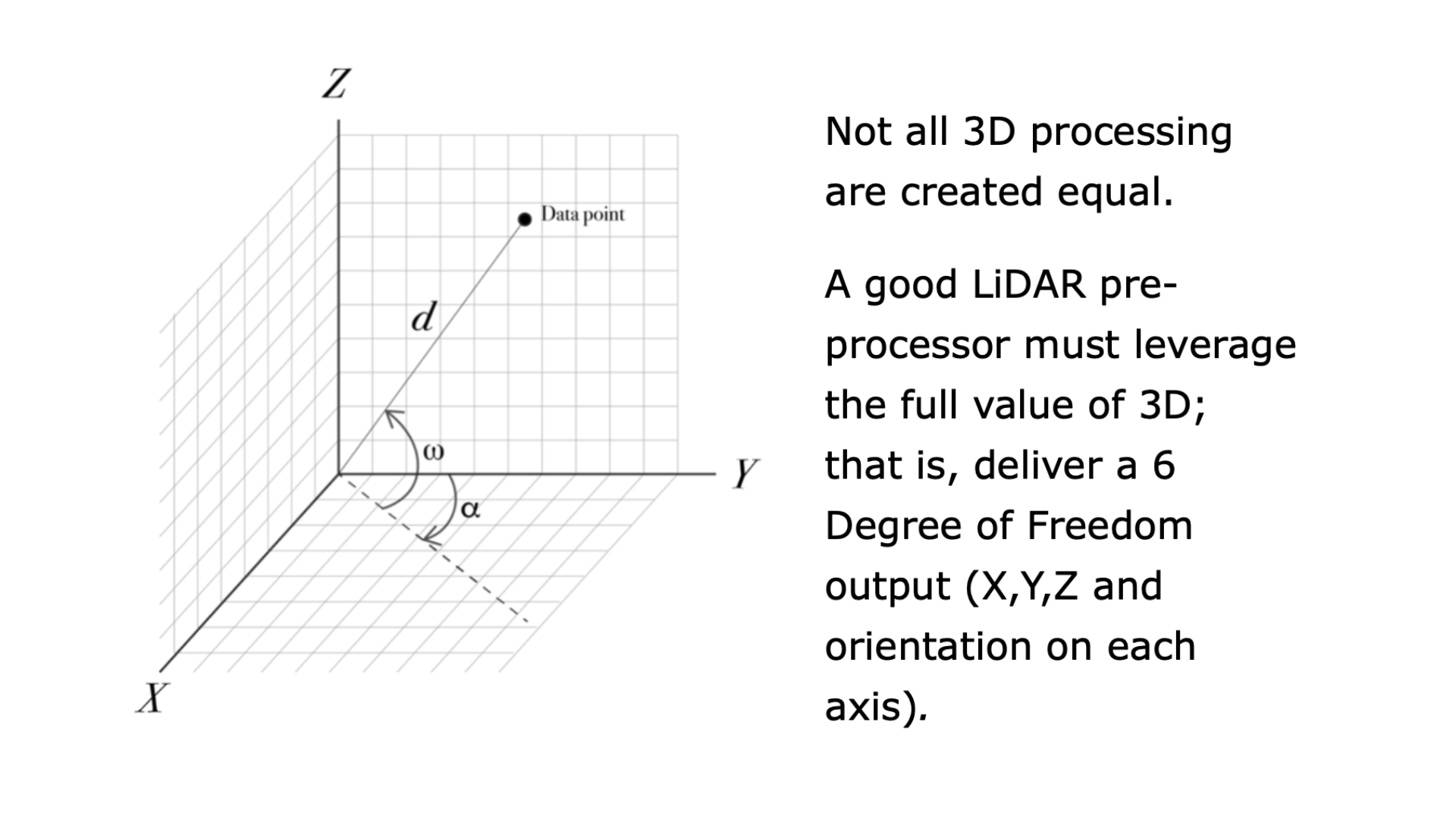

3D LiDAR is not "Image + Depth"

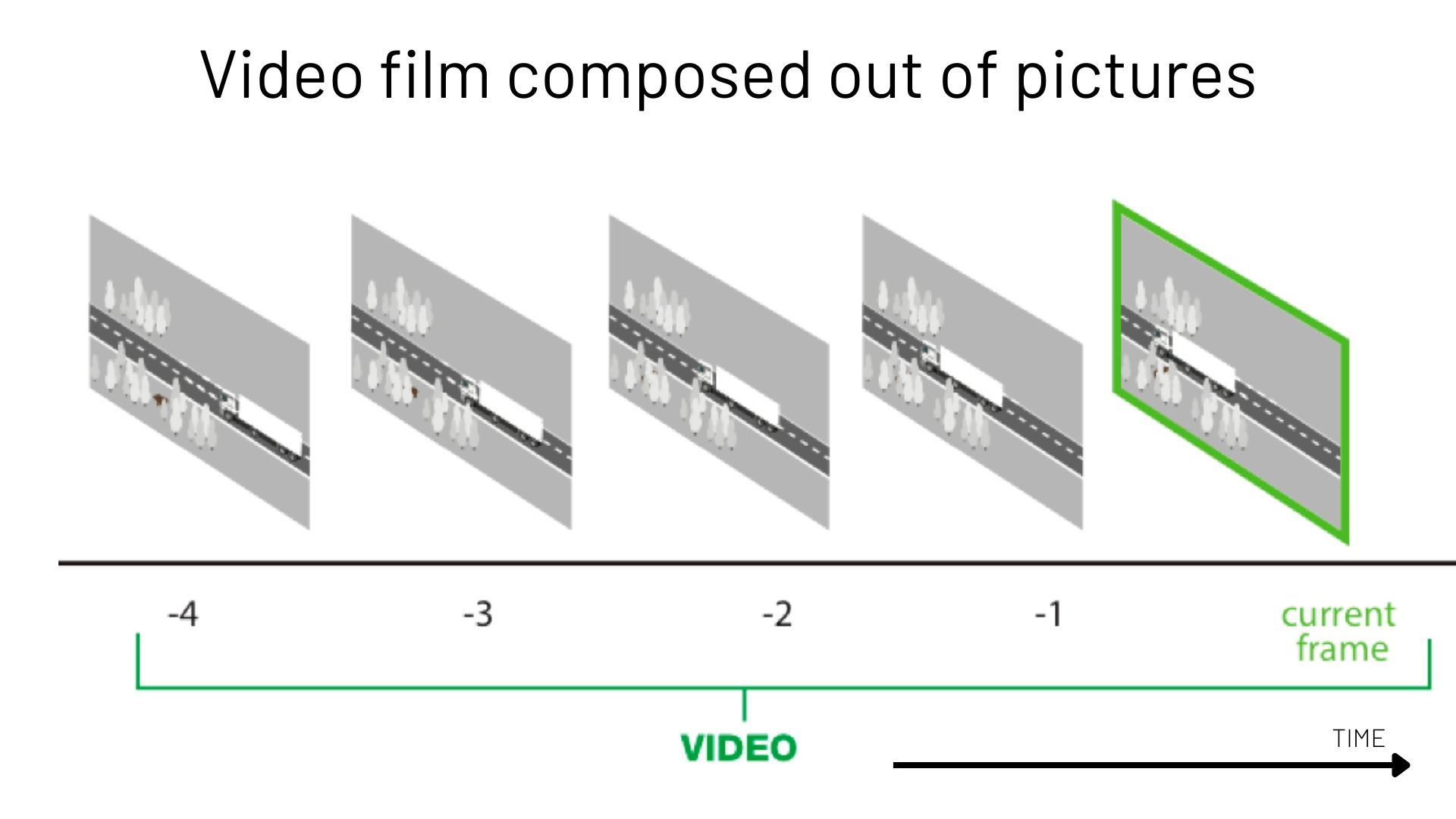

Compared with a single photograph, a video (a rapid succession of pictures at a specific frame rate) allows for a better interpretation of the situation:

This is partly because the human brain interpolates information and uses movement to make sense of what it perceives.

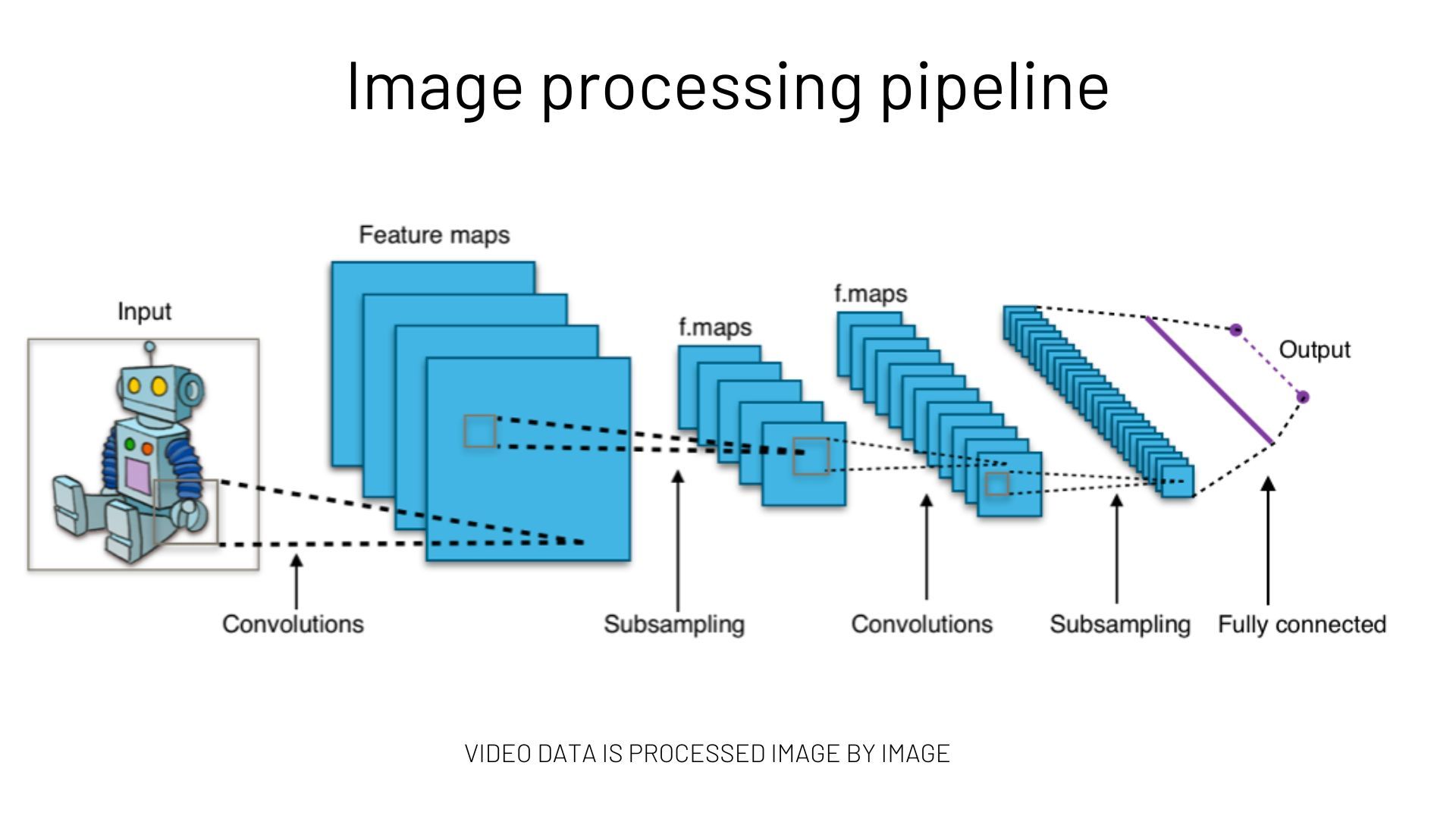

However, the typical pipeline for processing Video streams from Cameras, especially when using Machine Learning approaches, is based on analysing successive individual Images.

When 3D LiDAR first appeared, a natural first approach was to apply the same image processing techniques to this new type of data, for example treating depth as an additional colour (RGB-D with D = Depth).

This method delivers poor results (and requires significant processing resources) as it does not take advantage of the unique properties of 3D LiDAR data and the Spatial Information that it natively contains.

Worse than goldfish memory

When analysing the instantaneous perception of the environment around the sensor, processing LiDAR frames (the 3D equivalent of Images) one by one is equivalent to forgetting the past: your sensor discovers the world as if it first magically appeared in the current frame.

You throw away information as quickly as you acquire it (or even faster).

Even goldfish don't forget so quickly! (And by the way, they're smarter than you think.)

Of course there is information in each 3D frame, but:

- there is also rich information in the relationship between the current frame and the previous ones (context and association).

- more importantly, remembering how the world was before the current frame arrived reveals a meaningful part of reality (signal) that can only be distinguished from noise when time is taken into account (multiple frames).

Keep reading for some specific examples.

Increasing resolution and processing power is not a magic wand

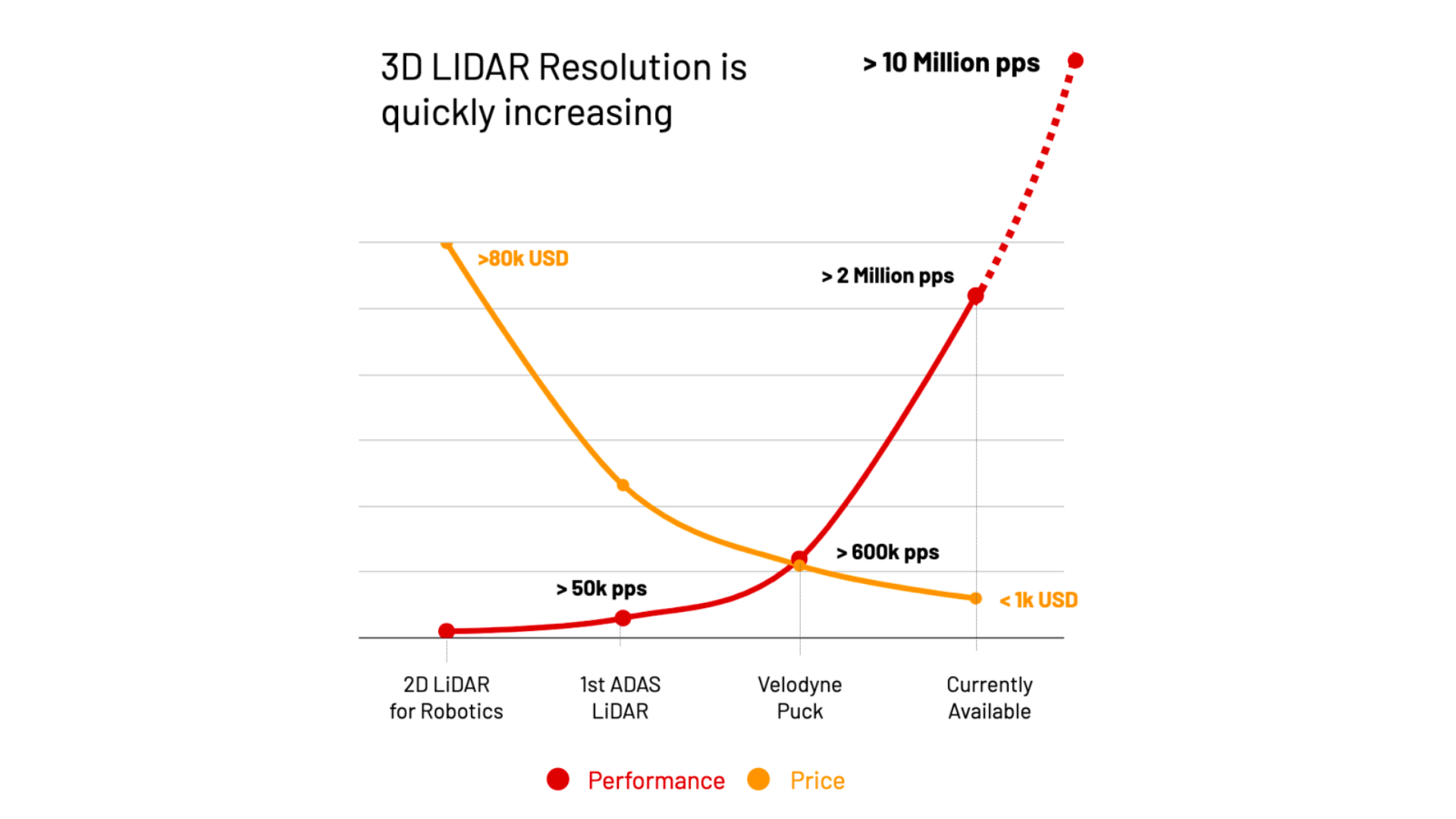

As we mentioned in a previous article, 3D LiDAR is on an incredible trend, with prices dropping while performance rises.

This will become even more interesting as performance, measured in points per second, is expected to improve five-fold soon and reach tens of millions:

This presents a number of challenges, including how to process such a large amount of data in real time (hint: using 3D pre-processing software), but it doesn't solve the problem of instantaneous memory loss.

Smaller objects will be detected, but not smaller changes.

Super-Resolution based on high-performance SLAM

Simultaneous Localisation and Mapping (SLAM) refers to the ability of a sensor, in this case 3D LiDAR, to know its own position and orientation in a map as it is being built.

It's one of the basic features performed by a 3D software pre-processor such as Outsight's.

The output of the SLAM feature is typically used for Localisation purposes, including understanding the precise movement and velocity of the Sensor itself (Ego-Motion).

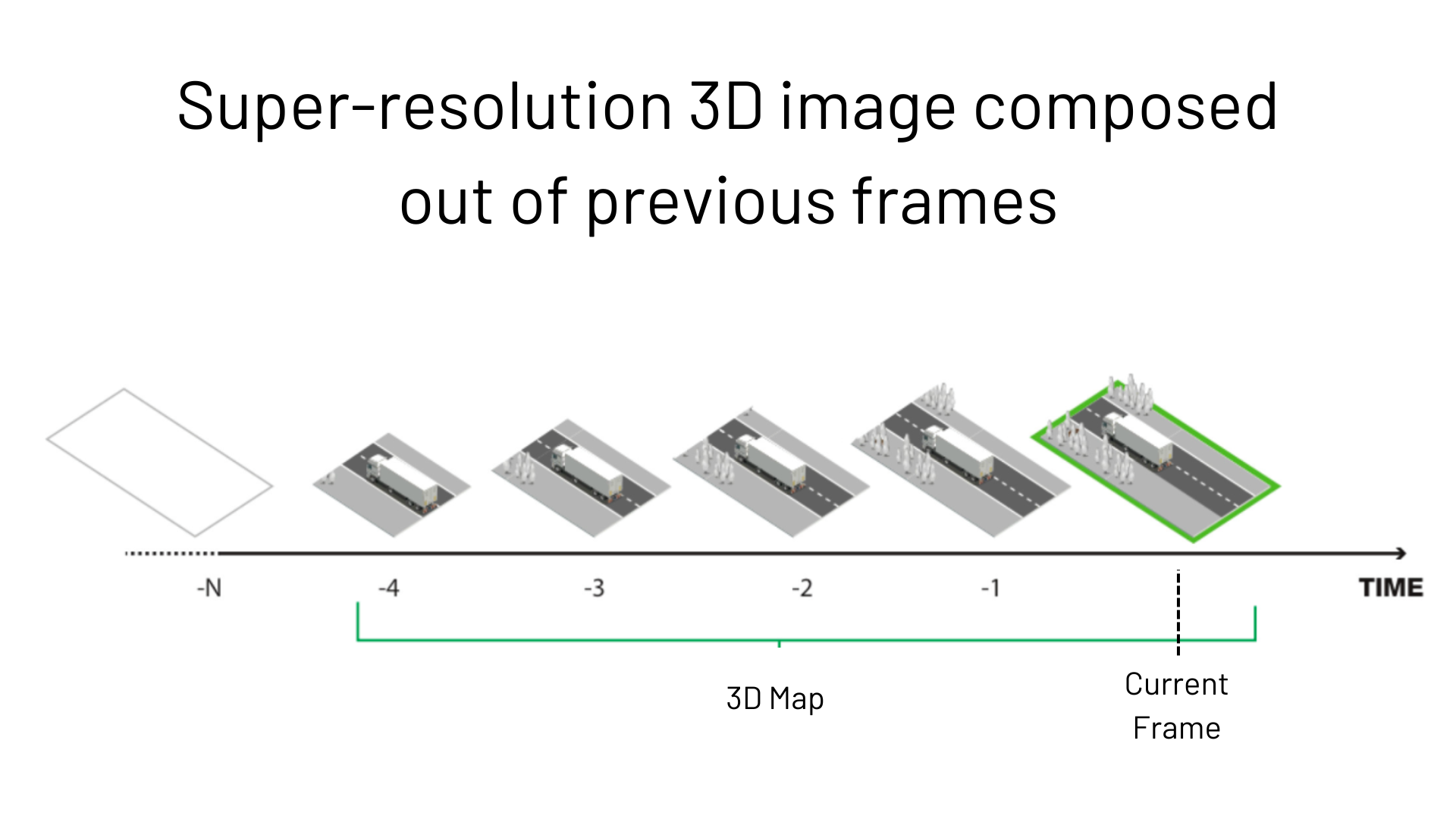

However, because the Ego-Motion output provides the precise relationship between successive frames (the relative position and orientation), it also allows generating a Super-resolution 3D image (a Map) in real-time, the equivalent of what a Video stream is to an Image:

In this case, the goal isn't to use the 3D map for cartography, but to increase the resolution as the sensor (or the objects around it) moves.
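As a rough illustration of the idea, the sketch below chains relative Ego-Motion estimates into a sensor pose and uses that pose to bring every frame into one common map frame. It is a simplified 2D version (x, y, heading) rather than full 3D, and the names `compose`, `to_map_frame` and `accumulate` are hypothetical helpers invented for this example, not part of Outsight's software.

```python
import math

def compose(pose, delta):
    """Apply a relative motion (given in the sensor frame) to a global pose (x, y, yaw)."""
    x, y, yaw = pose
    dx, dy, dyaw = delta
    return (
        x + dx * math.cos(yaw) - dy * math.sin(yaw),
        y + dx * math.sin(yaw) + dy * math.cos(yaw),
        yaw + dyaw,
    )

def to_map_frame(points, pose):
    """Transform sensor-frame points into the map frame using the sensor pose."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return [(x + px * c - py * s, y + px * s + py * c) for px, py in points]

def accumulate(frames, ego_motions):
    """frames[i] is the point list of frame i; ego_motions[i] is the motion from frame i to i+1."""
    pose = (0.0, 0.0, 0.0)  # assumption: the first frame defines the map origin
    cloud = list(to_map_frame(frames[0], pose))
    for frame, delta in zip(frames[1:], ego_motions):
        pose = compose(pose, delta)
        cloud.extend(to_map_frame(frame, pose))
    return cloud

# Hypothetical data: a static object 10 m ahead, seen while the sensor advances 1 m per frame.
frames = [[(10.0, 0.0)], [(9.0, 0.0)], [(8.0, 0.0)]]
ego_motions = [(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
cloud = accumulate(frames, ego_motions)
```

In this toy case the three observations land on the same map location, so the object is described by three points instead of one. The quality of the result depends entirely on how precise the Ego-Motion estimate is.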

What used to take days is now possible with Outsight's pre-processing software in less than 30 seconds:

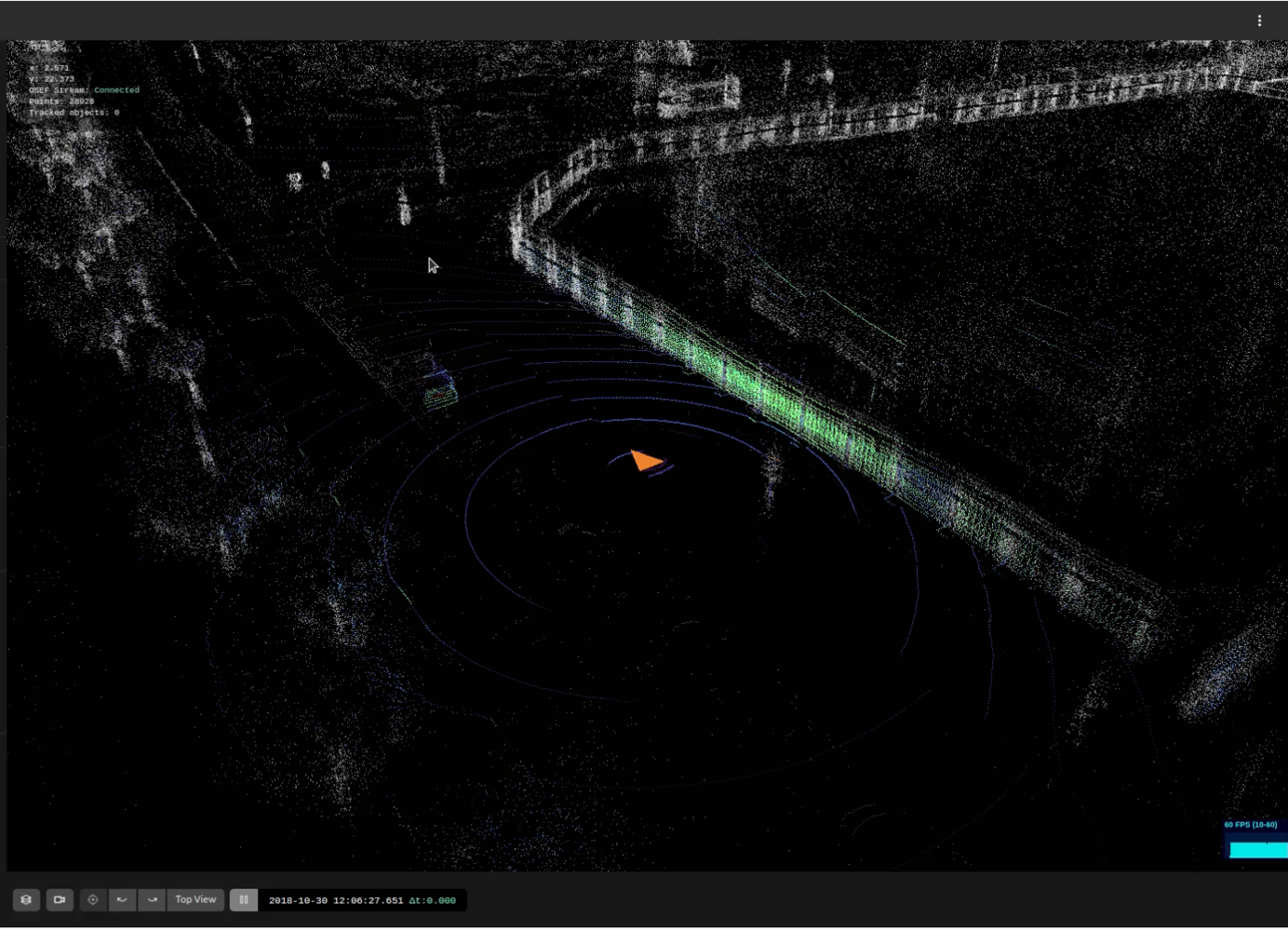

Consider this example. The image below is what you would get with a currently-available 3D LiDAR of significant resolution, in one frame:

Now consider what happens when a customer of Outsight uses the Super-Resolution slider in the real-time web interface:

The same situation becomes much clearer - if it's true for you, it's true for any computer vision system that uses this integrated real-time signal instead of the instantaneous frame:

3D LiDAR with Outsight processor output super resolution

This is not only relevant for LiDARs using repetitive scanning patterns; real-time software super-resolution also adds value for non-repetitive approaches:

Super resolution demonstration in real-time

Let's take a look at what this means in practice, with a real-world example.

Detecting invisible road debris and obstacles

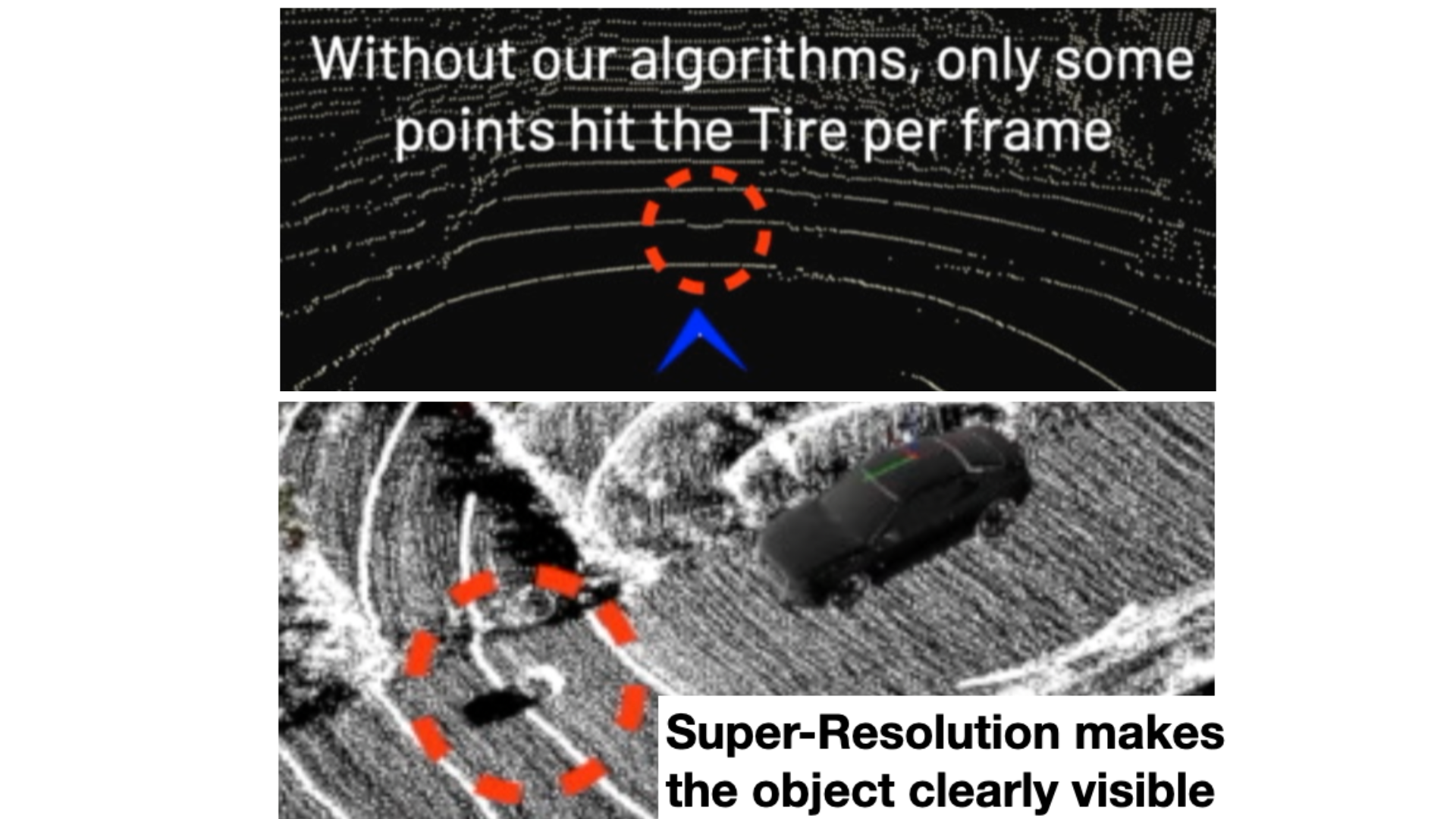

In a typical driving situation you want to detect debris such as a tire.

Because it's a light-absorbing black surface and relatively small, very few laser pulses will hit the object, and even fewer will return to the receiver, even with the highest-resolution LiDAR.

Applying Super-Resolution to Obstacle Perception

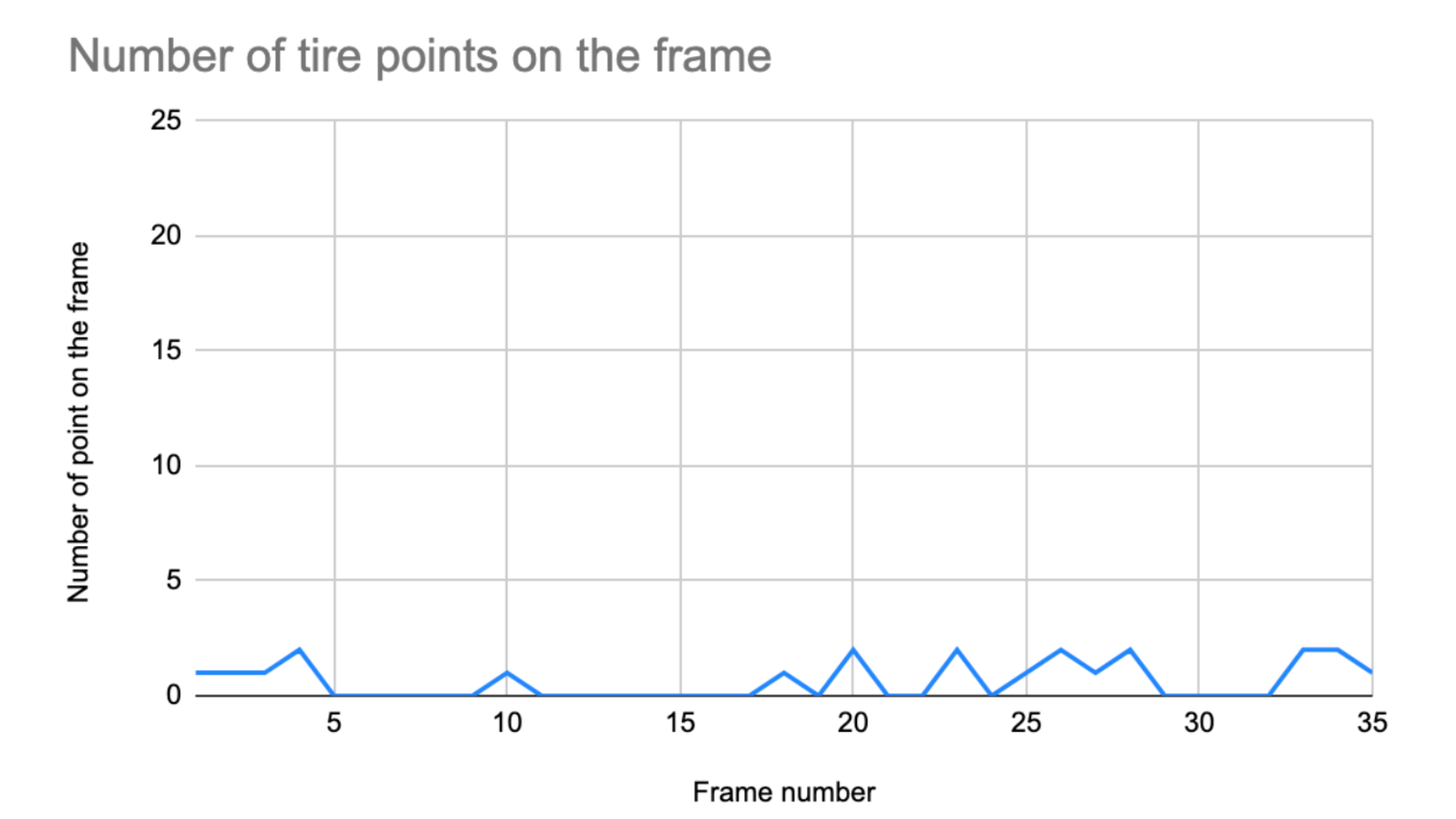

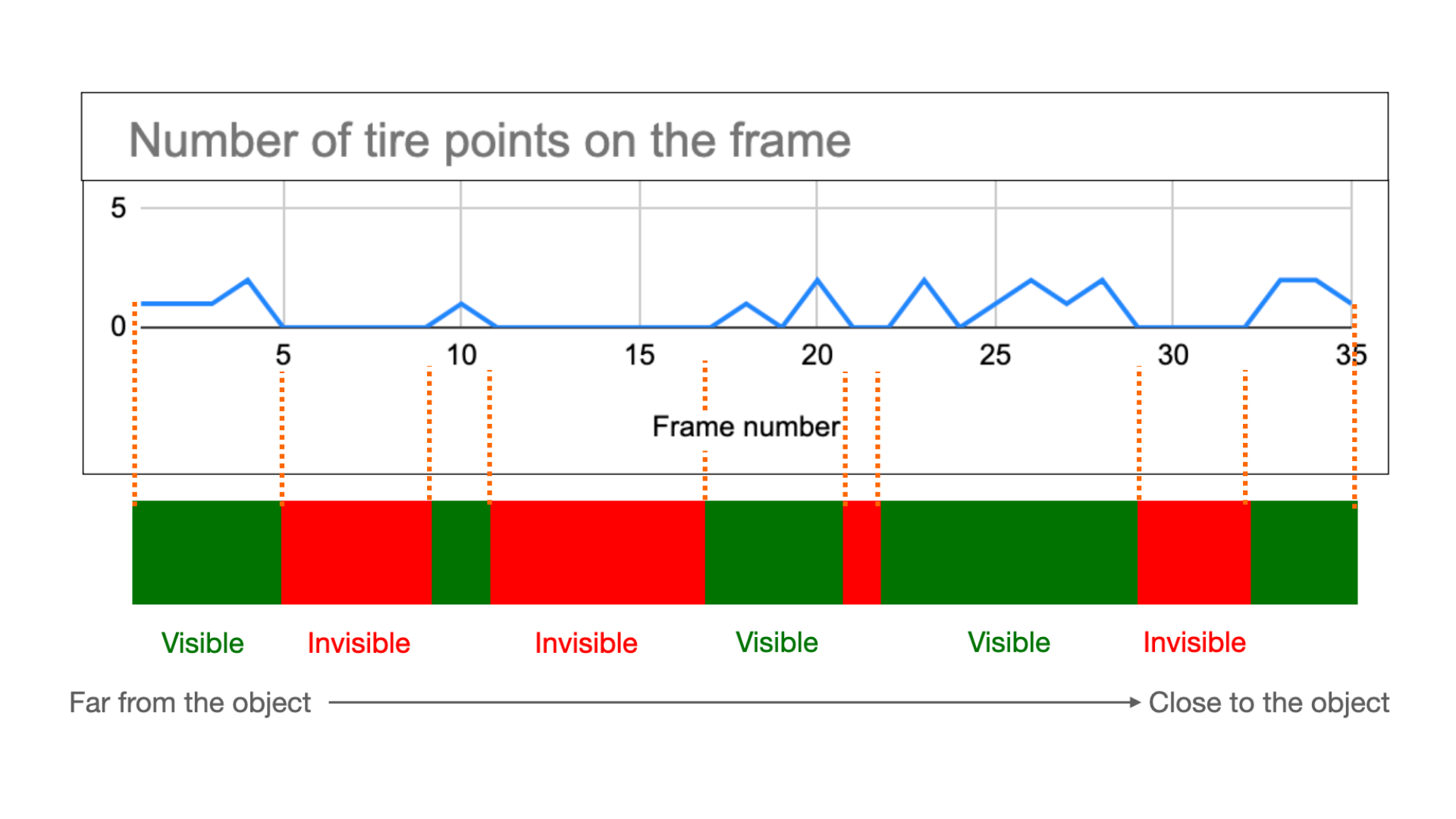

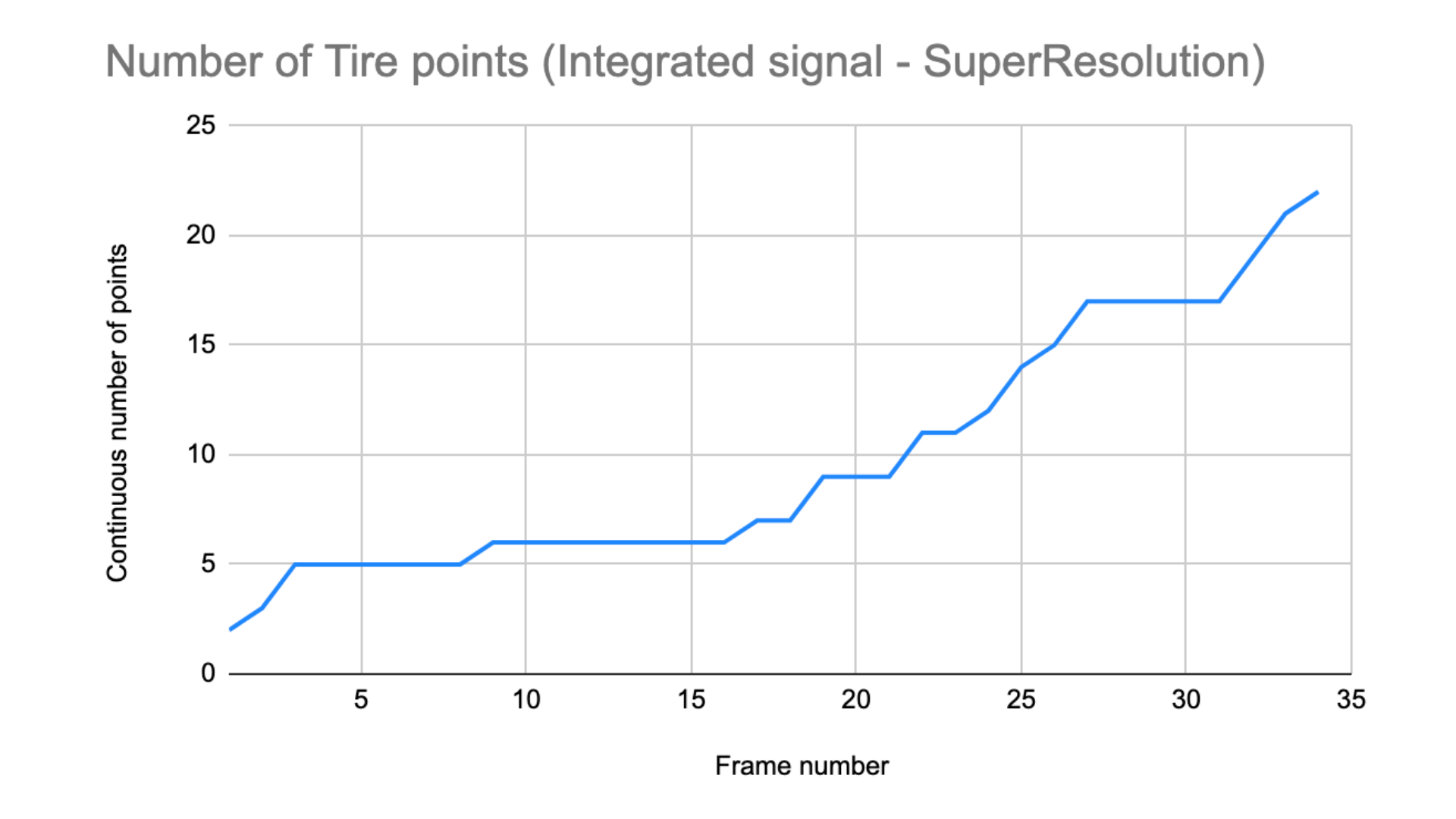

In this practical experiment we used a well-known mechanical 360° FoV LiDAR. The number of points that belong to the object and return to the sensor is shown in the chart below, for each frame:

As you can see, not a single frame delivers more than 3 points on the object (signal).

This is challenging even for the best Object Detection algorithms, especially if you take into account the irregularities on the road (noise) and the sidewalk edge (more noise).

Look at how similar the points on the tire look to those of the surrounding environment:

In fact, the image above is even a favourable case: if you look closely at the chart you'll see that in some frames the object does not appear at all - it becomes invisible!

That means that the challenge, for any object detection algorithm using this data as an input, is even harder: these few points appearing and disappearing will very likely be filtered out as noise.

To be fair, the fact that some frames contain no points even close to the object is in this case related to the repetitive scanning method of the LiDAR that was used, and won't be the case with other kinds of LiDAR. But this doesn't change the fact that a single frame is by definition limited by the number of points per frame.

Now, let's accumulate frames over time (in other words, integrate the signal).

Thanks to the SLAM algorithm, we know how each frame is positioned and oriented in relation to the previous ones, so we can build a live (real-time) 3D map that increases the actual resolution (i.e. how many points of the object are detected).
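To make the effect of integrating the signal concrete, here is a toy count. It assumes the frames have already been aligned by SLAM and that the object is static. The per-frame hit counts, the window length and the `MIN_POINTS` threshold are all hypothetical values chosen for illustration; they are not the measurements from the experiment.

```python
from collections import deque

def accumulated_hits(hits_per_frame, window):
    """For each frame, sum the object hits over the last `window` frames."""
    recent = deque(maxlen=window)  # the oldest frame drops out automatically
    totals = []
    for hits in hits_per_frame:
        recent.append(hits)
        totals.append(sum(recent))
    return totals

# Hypothetical per-frame hit counts: never more than 3, sometimes 0.
hits_per_frame = [2, 0, 3, 1, 0, 2, 3, 1]
MIN_POINTS = 5  # hypothetical minimum support a detector needs to trust an object

# Frame-by-frame processing: is any single frame above the threshold?
single = [h >= MIN_POINTS for h in hits_per_frame]

# Integrated processing: same data, remembering the last 4 frames.
totals = accumulated_hits(hits_per_frame, window=4)
integrated = [t >= MIN_POINTS for t in totals]
```

With these numbers no single frame reaches the threshold, while the accumulated count passes it on most frames after the first two. The window length is a trade-off: a longer memory gives more points on static objects but reacts more slowly to changes in the scene.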

In the same situation, same recording and sensor, the available data increases with the past observations:

This is not magic (and not interpolation: all the information comes from actual laser hits). It simply applies a memory of past points that helps to understand the present perception:

Increasing Resolution with Outsight's Software

Objects that were previously invisible become visible:

How it works

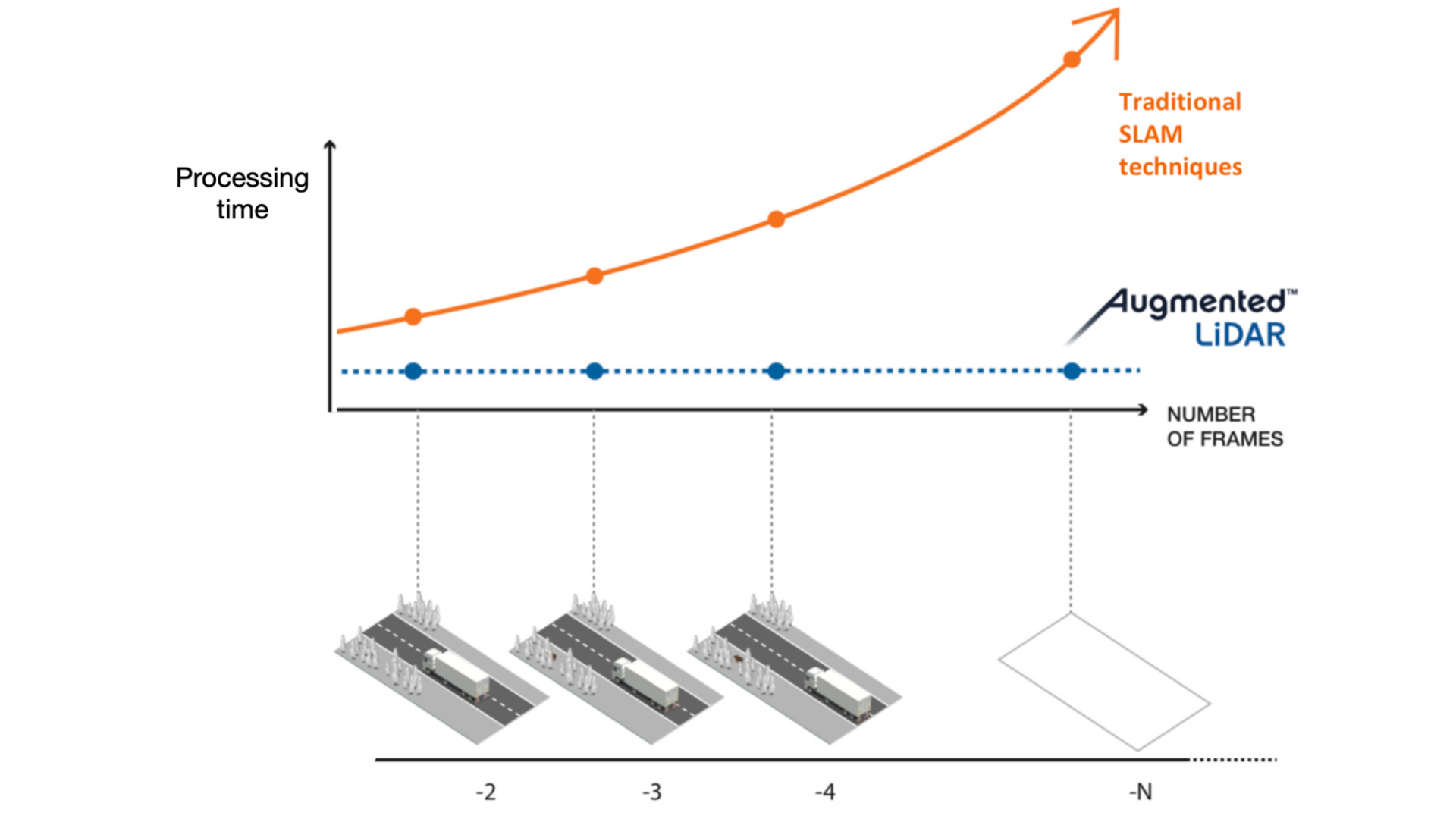

The basic algorithm enabling Super-resolution is SLAM, but there are many different approaches, and most of them require high-end computing power and are fragile in challenging dynamic environments.

As pioneers of LiDAR SLAM with more than 70 patent filings, our team at Outsight has validated our unique approach in dozens of different contexts and situations, using low processing power (ARM-based SoC CPU).

This is possible thanks to, among other things, a one-of-a-kind algorithm that we will describe in another article.

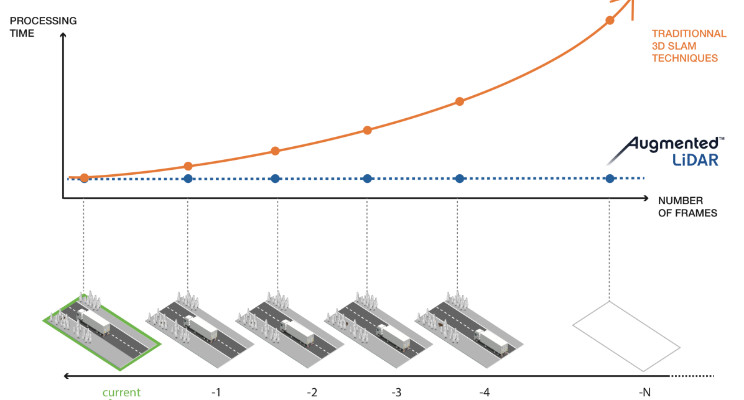

One of the key points is that its processing time is independent of the number of past frames being used to compute the position and orientation.
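The article does not describe how Outsight achieves this, so the following is not their algorithm. It is only a generic sketch of one kind of data structure with a similar property: a sparse voxel grid, where merging a new frame costs time proportional to the points in that frame, and looking up a location does not depend on how many frames were merged before. The class name `VoxelMap` and the 0.2 m voxel size are assumptions made for this example.

```python
class VoxelMap:
    """Sparse voxel grid: one entry per occupied cell, however many frames were merged."""

    def __init__(self, voxel_size=0.2):
        self.voxel_size = voxel_size  # cell edge length in metres (hypothetical value)
        self.cells = {}  # (i, j, k) cell index -> accumulated hit count

    def _key(self, point):
        # Floor-divide each coordinate to find the cell that contains the point.
        return tuple(int(c // self.voxel_size) for c in point)

    def insert(self, points):
        """Merge one frame; cost grows with len(points), not with the frames already merged."""
        for p in points:
            key = self._key(p)
            self.cells[key] = self.cells.get(key, 0) + 1

    def hits_at(self, point):
        """Dictionary lookup of the accumulated hits in the cell containing `point`."""
        return self.cells.get(self._key(point), 0)

# Hypothetical usage: three already-aligned frames, each with one hit on the same spot.
voxel_map = VoxelMap()
for _ in range(3):
    voxel_map.insert([(10.05, 0.05, 0.05)])
hits = voxel_map.hits_at((10.1, 0.1, 0.1))
```

Here the map still holds a single cell after three frames, and that cell remembers all three hits. Memory use grows with the volume observed rather than with the length of the recording.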

This is not magic either: those of you who followed the company Dibotics, now called Outsight, have probably attended one of our many presentations at international conferences or read one of these articles published many years ago:

The hardware and software flywheel

With LiDAR becoming an affordable piece of hardware, any company can start using it, without needing to become a LiDAR expert, thanks to the appropriate real-time pre-processing software.

3D Super-Resolution is an excellent example of how software can elevate hardware capabilities, resulting in even better data for software to process.